Research Projects

STARK – Spielerische Therapieunterstützung mit adaptivem Realitätsgrad für Kinder

The joint project “STARK (Spielerische Therapieunterstützung mit adaptivem Realitätsgrad für Kinder)” (English: Playful therapy support with adaptive reality level for children) aims to develop and test an interactive, immersive digital technology that uses evidence-based methods for treating depressive disorders in children aged 9 to 12. It is intended as a low-barrier, rapidly available 12-week intervention that can bridge the waiting time for a therapy appointment as part of a stepped-care approach.

Children should learn through play that thinking, feeling, and acting are closely related. They should also learn to recognize, name, and regulate their feelings. To this end, they are placed in various situations in virtual worlds in which they perform stimulating or relaxing tasks.

To achieve this, STARK uses a mixed reality system to provide technological support for therapeutic approaches such as understanding the emotion triangle, recognizing, naming, and regulating emotions, activating resources, and building positive activities. The STARK system aims to support children in dealing with emotions in a multisensory way (auditory, visual, haptic) by combining mixed reality technologies with gamification approaches. For introspection, a safe virtual place is to be created in which children can collect and activate their own resources as treasures.

Contact: Jonathan Kutter

SPIELEND – Soziale Präsenz durch immersive, emotionale und lebendige Erfahrungen von Nähe auf Distanz

The project SPIELEND (English: Playfully Creating Presence through Immersive, Emotional, and Lifelike Experiences of Closeness Across Distance) explores how Augmented Reality can be used to create a sense of social presence and emotional connection through shared play. The project develops and studies playful mixed-reality environments in which people can interact, communicate, and collaborate across physical distance as if they were in the same room.

SPIELEND investigates how different visual representations of remote partners, shared hybrid spaces, and tangible feedback—such as light and vibration—shape feelings of closeness and togetherness. These findings inform the design of AR-based games and interactive prototypes that merge virtual and physical elements into shared experiences.

Combining expertise from human–computer interaction, textile technology, and game design, the project brings together the University of Wuppertal, the University of Würzburg, the Institut für Textiltechnik of RWTH Aachen University, Augmented Robotics in Berlin, and the OFFIS Institute for Information Technology in Oldenburg. Together, the partners explore how immersive technologies can transform distant communication into playful and emotionally rich interaction.

Contact: Jonah-Noel Kaiser

SKIRIM: Self-actuated Kinetic Interaction with Rich Interactive Materials

Before the advent of smart homes, the toaster was the only thing that suddenly moved on its own by ejecting its slices. Today, this has changed drastically: blinds glide open, dishwasher doors open themselves, and robot vacuums roam around the house. Yet, these kinds of movements and shape change are designed solely with technical considerations in mind, and can feel out of place in the private home environment.

SKIRIM (pronounced “sky-rim”) explores how soft, shape-shifting materials can create effective but unobtrusive user interfaces (UIs) that feel natural in everyday life. SKIRIM is taking the next step in merging the digital and physical in the private home and is moving from passive, interactive everyday interfaces to soft, independent shape-changing user interfaces that dynamically change their physical form to communicate, guide, and respond. Although shape-changing materials are increasingly available today, interaction techniques, models of their effect on people, design tools, manufacturing processes, and UI design guidelines are missing, especially for soft, shape-changing UIs in the home environment.

In the SKIRIM project, we investigate in which smart home scenarios such user interfaces make sense, develop predictive models for the effect of movement and shape-change parameters (speed, size, duration) on users, research new digital manufacturing approaches, and create and evaluate tools for both experts and end users to design and configure SKIRIM UIs.

Contact: Pavel Karpashevich

Health Care Innovation Centre

(PIZ – Pflegeinnovationszentrum)

The PIZ project started in 2018 as part of a nationwide cluster that explores the use of technology to improve the quality of life of all caregivers and patients.

We are investigating novel interaction methods and visualization techniques for Augmented and Virtual Reality in the nursing context. To this end, we have developed virtual representations of our laboratories, each representing a caregiving environment (ICU, nursing home, nursing administration, and outpatient care).

Of special interest to us is the simulation of stressors in this context, such as audible alarms, time pressure, or emotional distress. Using physiological values (e.g., heart rate, breathing frequency) and questionnaires, we investigate the effect of VR-based clones of these stressors, evaluate the results in empirical lab studies, and explore possible use cases in nursing education.

Main Research Topics

- AR Visualisation of electronic patient data and vital signs

- Immersive training for stressful environments in nursing

- Reducing alarms on Intensive Care Units

- Novel techniques for medical care

Contact: Simon Kimmel

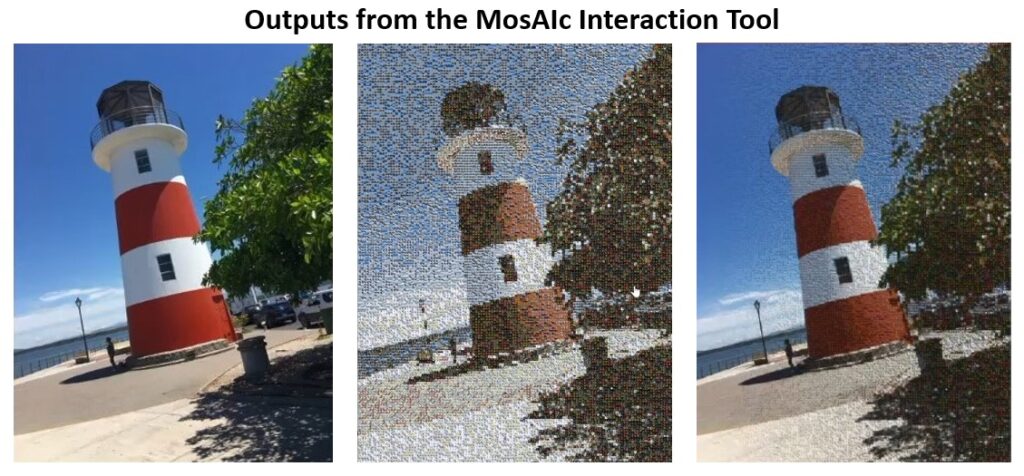

R&D for Photo Analysis and Organization Through AI

In collaboration with a leading company in the photo industry, we are researching, designing and developing innovative digital photo products. This project is located within the Human-Centered AI group and therefore has a strong focus on a human-centered approach to AI, Machine Learning, and Deep Learning. We are exploring different approaches to AI-powered software development for digital photo products, with a particular emphasis on human creativity and co-creativity with AI systems. An important question that we address is how these AI-based tools can be utilized to foster users’ creativity. We concentrate on human empowerment and pursue the idea that AI-based systems can augment and enhance human creativity, rather than replace it. These and other exploratory efforts foster exchanges between industry and research. Successful implementations often result in products of the collaboration partner.

Digitopias – Digital Technologies for Participation and Interaction in Society

Taking on the task of actively shaping the digitalisation of society, in Digitopias we conduct HCI research in four sub-projects, each developing exemplary technological solutions for a selected social challenge: (1) social proximity over spatial distance, (2) sustainability in shopping behaviour, (3) human-AI collaboration in production, and (4) citizen participation in political decision-making. Common to all sub-projects is the participatory research approach, in which citizens and users are involved in the research process from the very beginning. A cross-sectional fifth sub-project continuously implements measures for dialogue and collaboration between society and researchers. In addition, the prerequisites and factors for successful science transfer are systematically researched.

Main Research Topics

- Mixed Reality for Social Presence over Distance

- Digital Interventions for Sustainable Online Shopping

- Explainable AI for Human-AI Collaboration

- Digital Technologies for Citizen Access to Public Participation

- Transfer Management for Science Transfer

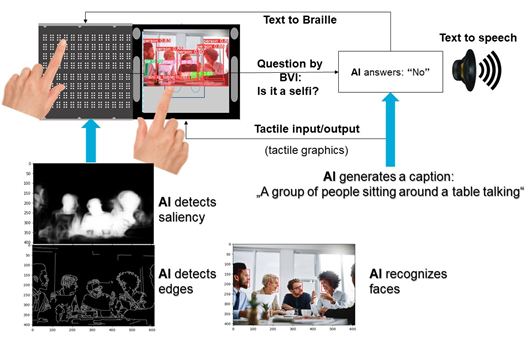

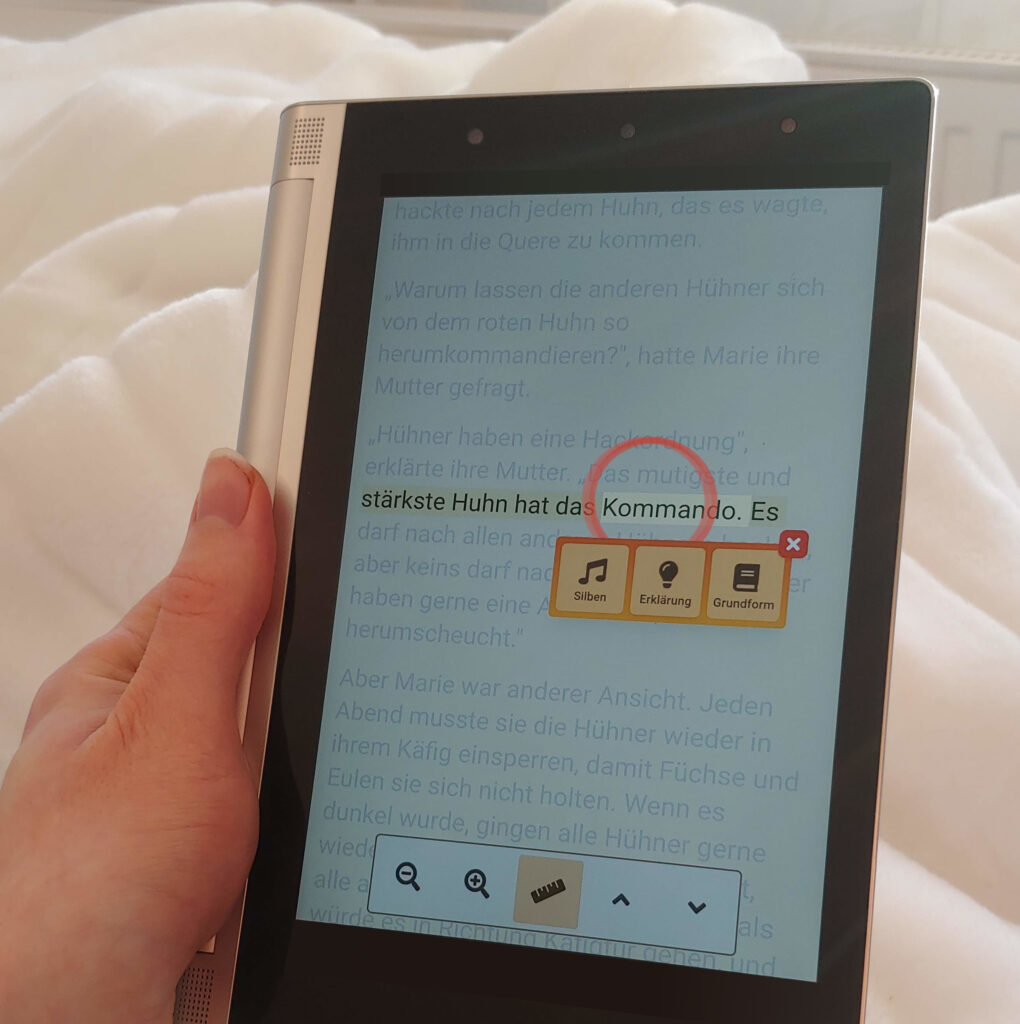

ABILITY – AI for Accessible Digital Media

ABILITY is an EU project that aims to design a comprehensive, portable solution to the accessibility issues that blind and visually impaired (BVI) users, including the deafblind, currently face with digital content.

To achieve that, a multisensory device (tablet) will be developed. The intended tablet uses AI, especially computer vision technologies, to analyze visual content and translate it into combinations of tactile, visual, and/or auditory feedback. Optimal interactive support scenarios will be investigated with BVI users and their assistants through consecutive user studies. Ultimately, BVI-AI interaction will be improved and, consequently, BVI-Computer interaction will be optimized by eliminating the existing barriers for BVI users in education, employment, leisure, etc.

Main Research topics:

- BVI user modeling

- AI to improve BVI-Computer Interaction

- Interactive adaptive AI-based User Interface (Adaptive UI)

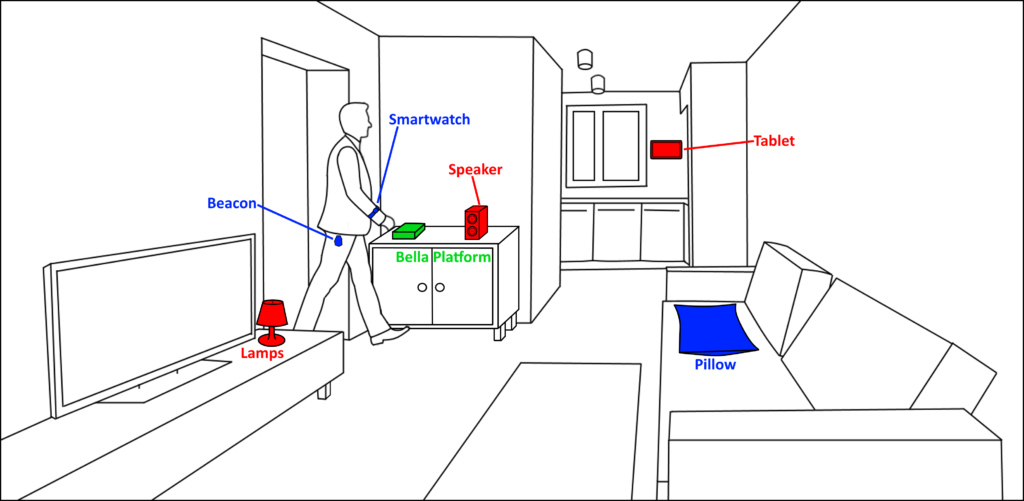

Debugging for End Users in Smart Environments (DEUS)

Over the last decade, smart home technology (SHT) has become an integral part of modern households. As a result, smart home ecosystems blend with daily social life, appropriated and integrated into personalised domestic environments. However, instead of infrastructurally complex and expensive in-built smart home packages, people prefer building modular ecosystems that are suitable for gradual adoption and expansion. With this trend, end users face the task of configuring and establishing the desired automations in their systems, and of debugging those setups until they work as intended. As new components are added, or the user’s needs change, old automations may no longer be adequate and require further debugging. Furthermore, faulty systems need to be diagnosed and reconfigured, or replaced. Unlike the initial setup, debugging becomes an ongoing task for the end user. Yet once the excitement over new technology wears off, debugging these complex systems quickly becomes tedious: another housekeeping chore that is frequent enough to annoy, yet too rare for users to remember all the technical intricacies of particular systems.

In our research, we aim to determine new directions for smart home ecosystems to provide their inhabitants with agency to shape, appropriate and troubleshoot the technology they live with. The DEUS project tackles this challenge by unveiling users’ mental models of smart home automations, documenting existing debugging strategies, and proposing new interaction techniques and concepts for digital tools to better support these tasks. Our findings will be reflected by prototypes of new technologies and tools for smart home administration, which we evaluate in user studies.

Contact: Mikołaj P. Woźniak

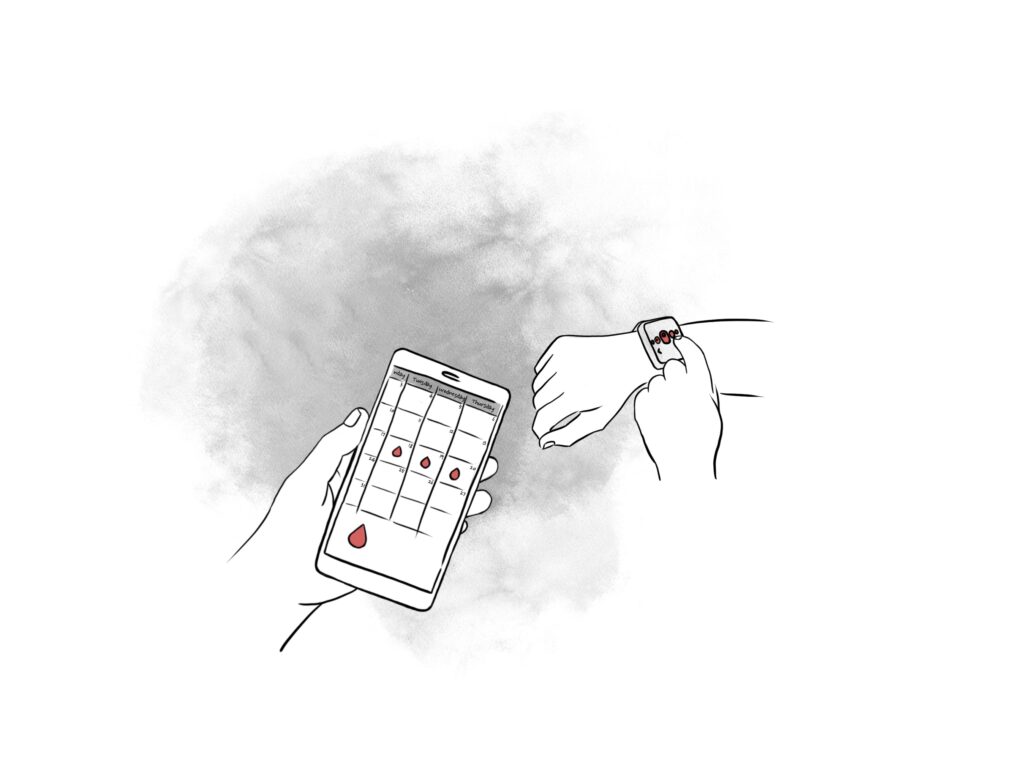

Transparent Usage of Personal Health-Data

Monitoring, tracking and controlling individual health is becoming increasingly popular through fitness trackers and apps. But what happens to this data?

Nowadays, our individual health data is mostly in the hands of health insurance companies, doctors, and health care facilities, and is not managed by each person individually.

Since the collected data is sensitive and considered to be worthy of special protection, it is currently very difficult to allow other institutions to use this data, even though the users could benefit from sharing it, for example, in their regular health care.

The Health-X dataLOFT and NFDI4Health projects aim to empower citizens to provide, control, and better make sense of their own health data. With the dataLOFT platform, users will be able to work with their data in an ecosystem of health applications that uses GAIA-X standards to ensure data security and privacy.

As part of the project, future users will be surveyed, and prototypes of health applications will be created and tested based on the needs captured.

A user-centered design process aims to empower users as individuals to understand the use of their data, trust the process behind it, and establish responsible usage of that data.

Main research topics:

- User-centered design

- Health data

- Smart Wearables

Contact: Sophie Grimme and Uwe Fischer

Completed Projects

BUKI – Bürgerfreundliche Dokumentenausfüllung basierend auf KI

In the public sector, application forms and documents are often so complex that they are difficult for citizens to understand. As a result, citizens who are dependent on municipal assistance often have difficulty filling out these forms correctly. This can result in citizens not receiving the benefits to which they are entitled, or receiving them only after a delay. The BUKI project is developing an AI-assisted system that helps citizens fill out complex application forms. It focuses on one exemplary public service: the housing eligibility certificate (Wohnberechtigungsschein).

BUKI is a retrieval-augmented generation (RAG) chatbot designed to guide users through the application process with clear and simple explanations. It can extract relevant information from uploaded documents and automatically complete application forms using an accurate OCR feature. In addition, BUKI supports multiple languages and can generate a finalized PDF form in German.

Beyond its technical capabilities, the project also explores ethical implications of using AI in the public sector, including data protection and data sovereignty. The ultimate goal of BUKI is to make access to public services easier, particularly for people who rely on municipal assistance.

Acceptance and Social Interaction of Humans with Automated Vehicles

This project explores the acceptance and cooperation of ACPS in the safety-critical domain of mobility. Soon, there will be mixed traffic scenarios in which autonomous vehicles will coexist and cooperate with human traffic participants, including pedestrians and cyclists. Many interactions in urban traffic are not, or only weakly, legally regulated and observed. Instead, interactions of traffic participants are embedded in established social norms and practices. In this research project, we aim to understand the role of social norms for the recursive relationship between autonomous vehicles and the behavior of human traffic participants. How will automated and autonomous vehicles change social practices of cooperating in urban traffic scenarios? Which social cues, in addition to the technical signals of an automated vehicle, are necessary for the acceptance of autonomous vehicles in daily road traffic? To answer these questions, the project draws on theoretical insights from behavioral social science and computer science. In methodological terms, we implement a rigorous set of experimental designs, ranging from large-scale online experiments to VR and field scenarios.

Main Research Topics

- Prosocial interaction between vulnerable road users and automated vehicles

- External Human-Machine Interfaces (eHMIs)

- Behavior towards automated vehicles

- Virtual reality experiments, field experiments, online surveys and online experiments

- Effects of social factors on prosocial behavior in traffic

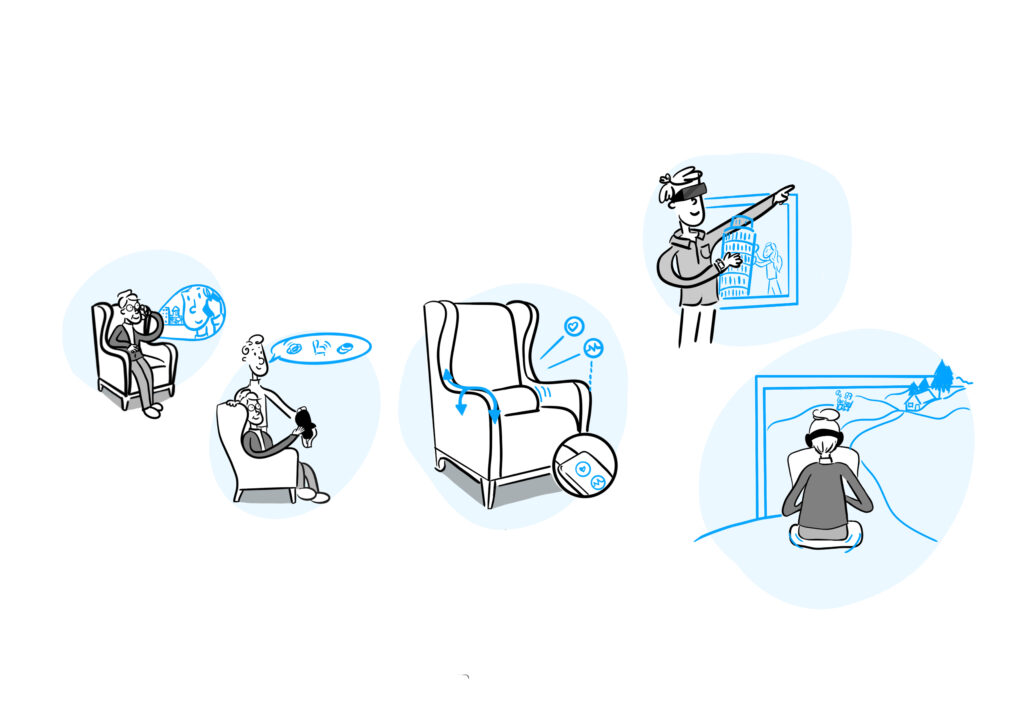

ZEIT – Zusammen Erleben, Immersiv Teilhaben

The aim of the joint project “ZEIT – zusammen erleben, immersiv teilhaben” (English: experience together, participate immersively) is to research and develop a multimodal, target group-centered immersion technology as a system solution for bridging the gap between elderly people living alone and their relatives.

In this project, social connectedness between separated or distant people will be strengthened through mixed reality and social cues. The focus is on the emotional aspects of interpersonal communication, such as the transmission of joy and happiness, contentment, discomfort, stress, and worry, but also on the typical physical interactions otherwise missing in communication via video and audio, such as a touch, a hug, a handshake in greeting, or a pat on the shoulder to praise and affirm.

Therefore, the visually conveyed experience in the VR application is supplemented by tactile stimuli over large areas of the body. For that, programmable active textile actuators are used that do not require complex mechanical actuation, can be adjusted to suit the effect, can be integrated into digital systems, and can be seamlessly embedded in textile household objects. Processing responses to immersive stimulation using affective computing also enables the creation of a feedback system to other participants in multi-user scenarios. Furthermore, integration into a familiar household object, the chair, allows the overall system to be used implicitly and thus makes it suitable for the target group of elderly people.

Health Informatics

Physical activity is an important key to healthy aging. The aim of the AEQUIPA project is to develop interventions that promote physical activity in old age. To this end, factors within a community that influence mobility are being investigated and interventions developed. A particular focus lies on measures that are applicable to all socio-economic groups within the community.

Within the scope of AEQUIPA, OFFIS examines the application of technology-based interventions on the basis of sensor-detected vital parameters for the preservation of mobility of older people. Furthermore, OFFIS is working on detecting preventive measures for functional decline of muscle groups and is creating a system for monitoring and displaying physical activity.

Project websites: OFFIS AEQUIPA project page and aequipa.de

Understandable Privacy Policies

Emerging technologies are deeply ingrained in our day-to-day lives. To use a smart watch that reminds us to stay active or social media that connects us with friends and family, we accept and allow access to our private data. Often, the privacy policies we agree to consist of long texts written in a way that is difficult and time-consuming to comprehend.

The aim of the PANDIA project is to make the topic of data protection more interactive and understandable, and to help users make informed decisions about the use of their data. As part of the project, we create prototypes for a PANDIA app and browser plugin suitable for everyday use. In addition, we investigate creative interaction methods and visualizations offered by Augmented or Virtual Reality and gamification techniques.

Main Research topics:

- UI/UX Design

- Mixed Reality technologies

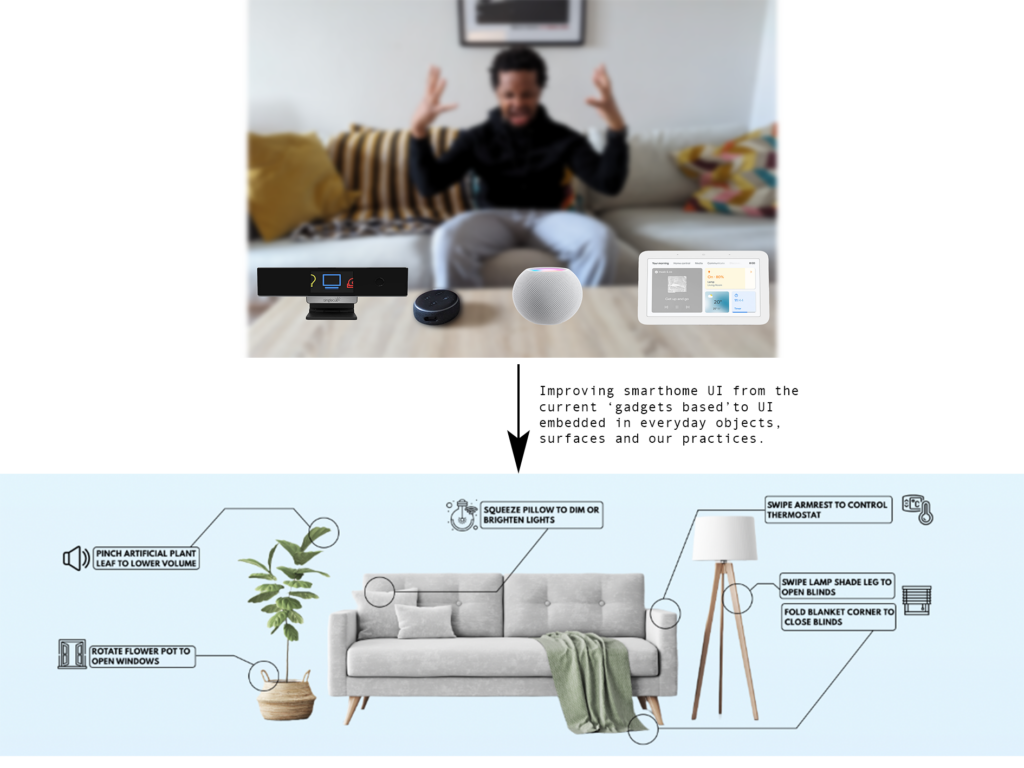

Rich Interactive Materials for Everyday Objects in the Home (RIME)

With the world gradually moving towards affordable smart home setups, new design and technical challenges are emerging. Each vendor has their bespoke interaction concepts and techniques which require learning and remembering.

These varying interaction concepts lead to users becoming frustrated, making mistakes, and having negative experiences, ultimately resulting in promising solutions being discarded. However, there is an opportunity to utilise artefacts and technologies naturally embedded into daily practices as a basis for new holistic control interfaces and mediums.

Therefore, the Rich Interactive Materials for Everyday Objects in the Home (RIME) project seeks to unlock the interactive potential for rich interaction with the materials in our smart environments.

The RIME project seeks to achieve this goal by designing, prototyping, and evaluating scalable sensor and actuator technology together with touch interaction paradigms for seamless integration into everyday materials and objects, enabling natural and scalable hands-on interactions with our future smart homes. As a result, the physical artefacts in our homes, such as chairs, tables, walls, and other surfaces, can be equipped with an interactive digital “skin” or contain interactive sensor and actuator materials. Swiping along a table to unfold it for additional guests may then become a possible scenario.

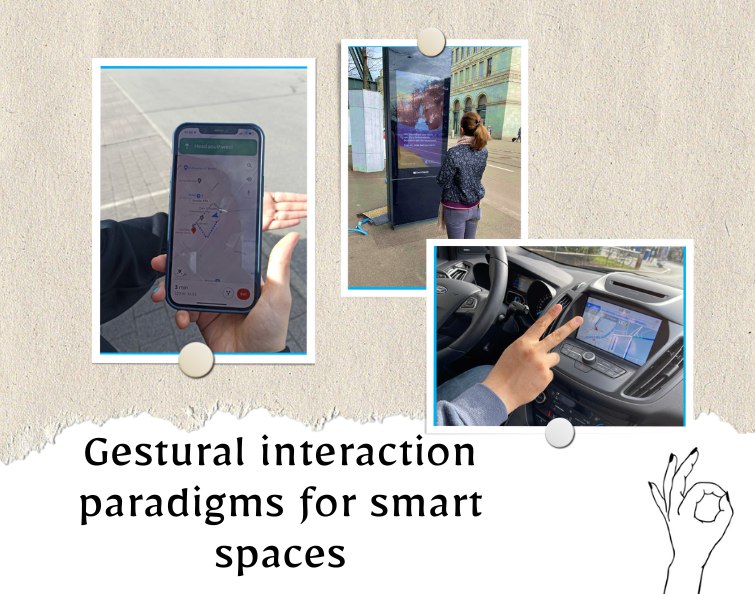

Gestural interaction paradigms for smart spaces (GrIPSs)

With the advent of intelligent environments (e.g., smart homes) and wearable computing technologies, 2D gestures are slowly disappearing and being replaced by more natural interaction modalities, such as voice or spatial 3D gestures, which take advantage of the whole body for interacting with pervasive computing environments. While 3D gestural interaction has been explored for many years now, there is no general vocabulary of gestures that is generalizable across different spaces and situations. There is also no metric that allows for a comprehensive assessment of the quality and usability of gestures in different contexts. We aim to understand and support gestures related to interactions, particularly in smart environments. Here, we will look at single gestures and gesture sequences carried out not only with one hand, but also bimanually and with the support of the whole body.

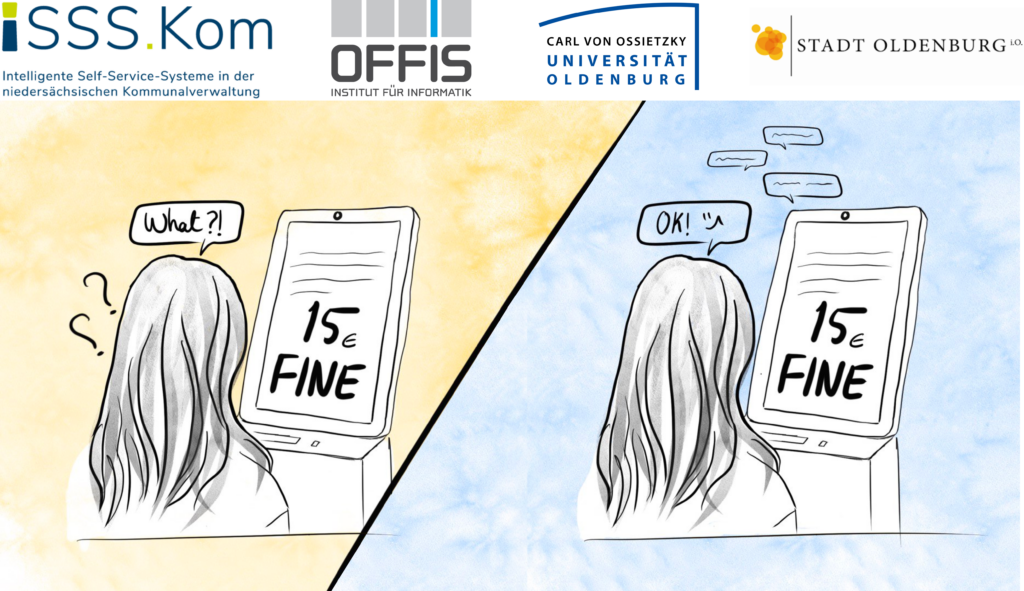

Intelligent Self-Service Systems (ISSS) in public sector

Self-service systems are well integrated into our everyday life, e.g., self-service checkouts in retail. However, they seem to appear more often in the private sector than in the public sector (e.g., civil service).

In the ISSS.KOM project, we investigate the effect of interacting with an Intelligent Self-Service System (ISSS) instead of with a human (bureaucrat) in public administrative processes, such as passport renewals or car registrations. In addition, we aim to examine the level of citizen satisfaction with and acceptance of ISSS in such settings.

The project goals are to be achieved by designing an ISSS model that is visually and vocally responsive to humans, their languages, expressions, and emotions. We will then use an empirical approach to examine the efficiency of our prototype in five major cities in Lower Saxony.

Gaze Behavior in Reading

We are interested in leveraging gaze behavior to infer user needs and support their interaction in an implicit manner. To this end, we focus on online interpretation of natural gaze patterns to predict user needs and intended interactions. Specifically, we aim to support children in learning to read by recognizing and mitigating comprehension problems in real time.

Main research topics:

- Inferring reading progress from gaze behavior

- Detecting reading problems in real time

- Assistive visualizations for word and sentence level decoding